Author: Espai Societat Oberta + Canòdrom + Algorights

Algorithmic justice, automated decision-making systems, human rights, and inequalities. During her visit to Barcelona in October, the American writer, political scientist, and professor Virginia Eubanks took part in several meetings in which she stressed that there is no such thing as algorithmic neutrality and insisted on the need to involve the people affected in the fight against discriminatory technologies.

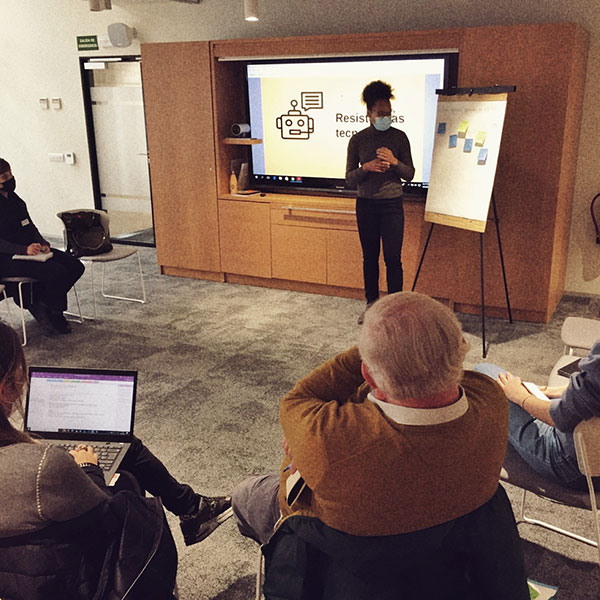

Eubanks met with activists at the Ateneu del Raval and also gave a public talk at the Canòdrom – Ateneu per la Innovació. Both meetings were co-organised by Espai Societat Oberta together with Algorights and the Canòdrom, and in them Eubanks shared her research and conclusions about the impact of automated systems on social rights.

Personal stories

Eubanks relies on personal stories to bring her research closer to a diverse audience. In fact, her book ‘Automating Inequality’ compiles several of these stories. In her conversation with Jordi Vaquer (Open Society Foundations), Lina María González (Algorights) and Oyidiya Oji Palino (Catàrsia), Eubanks recounted some of these stories and highlighted what they had in common. Her appearance at the Canòdrom also produced several key takeaways, and here we share four that seem essential to understanding her work:

- Inequality is structural, not an individual issue.

- Automated administration must be investigated.

- It is necessary to involve the people affected in these struggles.

- Sometimes solutions come from people and social movements.

One of the central points of Eubanks’ research and approach is the question of fairness and bias. Technology and automated systems are often presented as neutral, yet algorithms learn to make biased decisions that become ‘permanent and legitimate’. Jordi Vaquer asked her about this, also emphasising that people who are excluded from housing or work are also excluded when it comes to access to technology.

Forms of violence and a global network

“How do we show that automation amplifies the suffering of the people it is supposed to help?” asked Oyidiya Oji Palino, a member of the Asian-descent collective Catàrsia. “Economic violence is violence”, Eubanks reminded the audience. “We have to keep in mind that people in situations of social exclusion have their own voice and things to say, and can contribute to finding solutions beyond an algorithm”, she added in response to Oji Palino.

Speaking for the Algorights collective, Lina María González asked Eubanks how she envisioned a global network through which civil society could make its voice heard and protect itself from the biases and inequalities that automated systems reproduce across different countries. Eubanks replied that, fortunately, she is encountering more and more conversations about algorithmic injustice. Even so, the author regrets that two things are still missing from this struggle: a stronger presence of the voices of the people affected, and more interest in global conversations that connect technology and social justice issues around the world.

Finally, Eubanks stressed the importance of staying alert to mathwashing, since there is a certain ‘tendency not to want to understand what automated systems are about, because we have been sold the story that they are impossible to understand, and they are not. I hope that people are interested in learning and defending their rights’.

In the closed-door meeting with activists, the author of ‘Automating Inequality’ shared with the group her motivations for writing the book. “I try to explain the problems we face with technology and the challenges of the people who are most affected. I also try to understand the systems and their behaviour, tell stories from the field and make people understand that the future is not so far away and that technology is already affecting our lives,” said Eubanks.

The writer also regretted that technology is receiving ‘too much attention’ while different social contexts are being neglected. In addition, Eubanks told the activists that she had observed similar patterns of behaviour in the US and Spain. ‘I think events like today’s are really important for sharing what is happening and understanding other cases. Also because some systems that are already in use in some countries will sooner or later be implemented in others. We have to identify what is happening in our communities, learn how it affects us and how to combat it’, she added.

Eubanks framed the visit and her talks in the context of a new project she is working on, which involves collecting new stories related to automated decisions and social services. “I’m particularly interested in everything that has to do with the minimum vital income, because in the US they think this could solve all the problems, and here I’m seeing that it might not be true,” she confessed. “I am collecting stories in 12 countries, and I must warn that none of the decision-making systems is neutral,” concluded Eubanks.